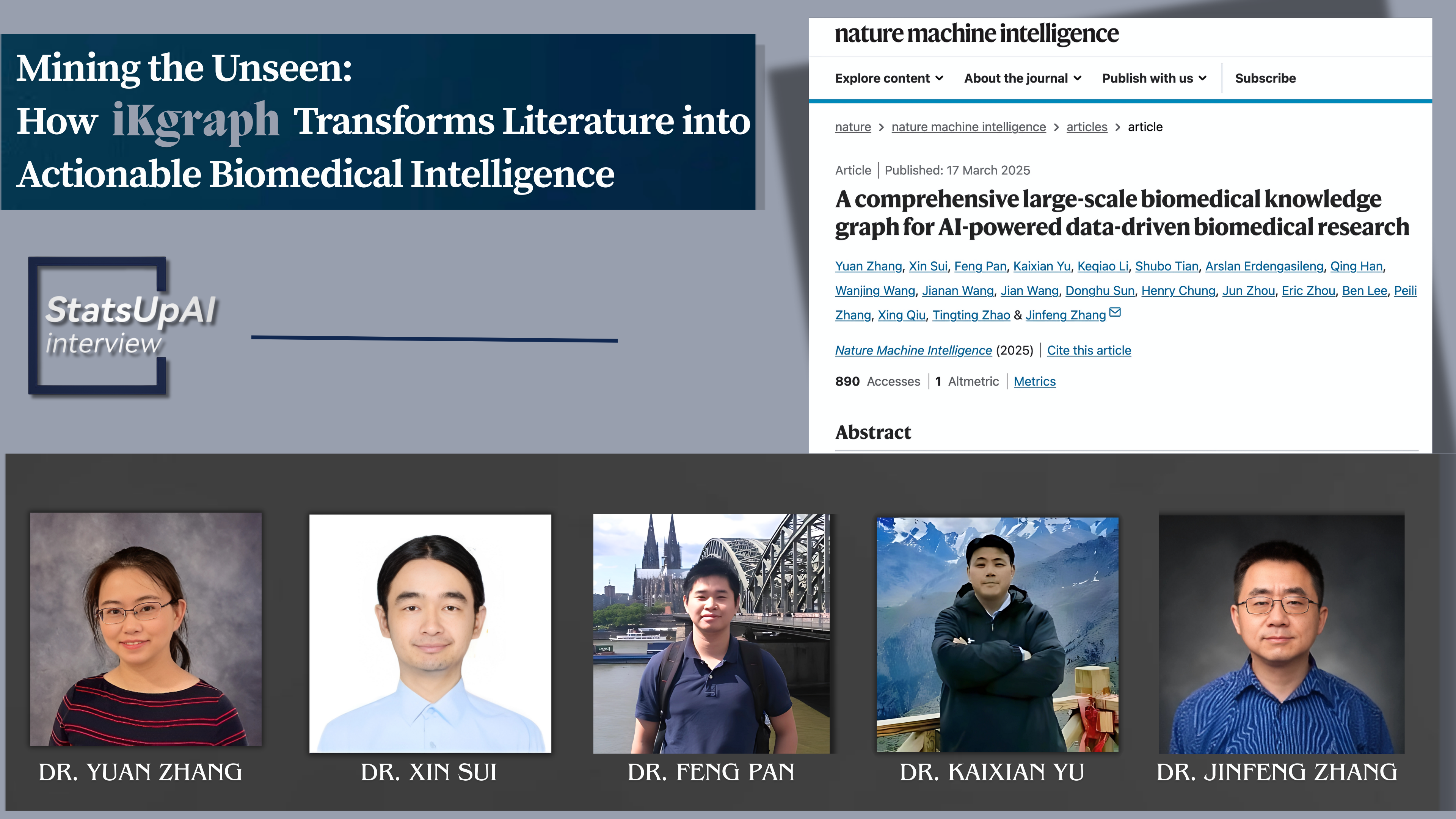

Mining the Unseen: How iKraph Transforms Literature into Actionable Biomedical Intelligence

Original article: A comprehensive large-scale biomedical knowledge graph for AI-powered data-driven biomedical research

Dr. Yuan Zhang

Dr. Yuan Zhang is the Chief Scientist of R&D at Insilicom LLC, leading the AI team in developing advanced AI models for biomedical research. With expertise in natural language processing, knowledge graph construction, and AI-driven drug discovery, Dr. Zhang has played a key role in building iKraph, a large-scale biomedical knowledge graph, and in developing algorithms for adverse drug event monitoring and drug repurposing. Their work integrates AI, probabilistic semantic reasoning, and graph-based models to accelerate scientific discovery in the biomedical field.

Dr. Xin Sui

Dr. Xin Sui researches large language model (LLM) applications in biomedical text mining at Florida State University (FSU). With a PhD in Statistics, he specializes in biomedical text mining and knowledge graph construction. A winner of the LitCoin NLP Challenge, he focuses on extracting knowledge from scientific literature.

Dr. Feng Pan

Dr. Feng Pan, Associate Director of Data Science at Insilicom LLC, is an expert in biomedical data processing, natural language processing, and LLM prompting, with a proven track record as a key contributor to the LitCoin NLP Challenge and BioCreative competitions.

Dr. Kaixian Yu

Dr. Kaixian Yu holds a PhD in Statistics and currently serves as CTO at Insilicom LLC. Previously, Dr. Yu was an expert researcher at Didi Global's AI Labs, head of the community e-commerce search and recommendation system, and head of data science for growth at Shopee. Dr. Yu is dedicated to applying statistical methods to industrial practice, with research areas including statistical learning, natural language processing, computer vision, graph neural networks, reinforcement learning, and causal inference, has published several papers in top-tier journals such as Nature and Cell, and has served as a program committee member for leading conferences including KDD, IJCAI, and AAAI.

Dr. Jinfeng Zhang

Dr. Jinfeng Zhang obtained his bachelor’s degree from Peking University in Beijing, China, in 1997. He then came to the US for graduate studies at the University of Illinois at Chicago, where he earned a master’s degree in chemistry (2001), a master’s degree in mathematics and computer science (2002), and a PhD in bioinformatics (2004). He completed postdoctoral training in the Department of Statistics at Harvard University from 2004 to 2007 and joined the Department of Statistics at Florida State University as a faculty member in 2007. His research encompasses applications of computational methods and artificial intelligence in biology, including biological information extraction, natural language processing, biomedical knowledge graphs, and AI for Science. In the summer of 2022, Dr. Zhang took a leave of absence from Florida State University to devote his time fully to his startup company, Insilicom LLC.

1. Regarding the research background and significance, does this work discover new knowledge or solve existing problems within the field? Please elaborate in detail.

The work both uncovers new knowledge and addresses longstanding challenges in biomedical research. Here are multiple perspectives:

1. Novel Discovery of Knowledge

The paper introduces iKraph, a knowledge graph constructed by processing all PubMed abstracts with a pipeline that achieves human-level accuracy. This effort goes beyond traditional manual curation by integrating more than 10 million entities and over 30 million relations, thereby revealing novel associations and insights not captured in existing public databases. By aggregating and interpreting vast amounts of literature, the method uncovers previously hidden or underappreciated relationships (e.g., between diseases, genes, and drugs) that can inspire new hypotheses and research directions.

2. Solving Existing Methodological Problems

The study addresses a critical bottleneck in biomedical informatics: the extraction of structured, reliable knowledge from unstructured text. Traditional approaches struggled with scale and accuracy, but this work leverages advanced natural language processing and a winning pipeline from the LitCoin NLP Challenge to meet these challenges head-on. The development of the probabilistic semantic reasoning (PSR) algorithm is particularly noteworthy—it provides an interpretable framework for inferring indirect causal relationships, a task that has proven challenging with earlier methods. This directly tackles the problem of making sense of complex, multi-hop relations in biomedical data.

3. Impact on Applications like Drug Repurposing

The integration of diverse data sources and the ability to infer causal pathways allow the system to generate actionable insights, as demonstrated by its application in real-time drug repurposing studies (e.g., for COVID-19 and cystic fibrosis). This not only speeds up hypothesis generation but also improves the rigor of validation compared to traditional approaches. The work shows that structured, high-quality knowledge graphs can be directly used to predict and validate novel therapeutic candidates—bridging a gap that manual literature reviews and less sophisticated automated systems have struggled to close.

Overall, the study exemplifies a paradigm shift: it both discovers new biomedical insights by mining and integrating massive amounts of data, and it provides robust solutions to longstanding challenges in automated knowledge extraction and hypothesis generation.
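To give a rough sense of how probabilistic reasoning over multi-hop paths can work, here is a minimal Python sketch. It assumes each direct edge carries an independent probability, multiplies probabilities along each two-hop path, and combines the paths with a noisy-OR. The toy graph, the probabilities, and the function `indirect_probability` are hypothetical; this is a generic illustration, not the published PSR algorithm.

```python
from collections import defaultdict

# Hypothetical toy graph: each directed edge carries an assumed probability
# that the relation is real. Names and numbers are illustrative, not iKraph data.
EDGE_PROBS = {
    ("DrugX", "GeneA"): 0.9,
    ("GeneA", "DiseaseY"): 0.8,
    ("DrugX", "GeneB"): 0.5,
    ("GeneB", "DiseaseY"): 0.6,
}


def indirect_probability(edge_probs, source, target):
    """Score an indirect source -> target relation via two-hop paths.

    Assumes edges are independent: a path source -> mid -> target holds with
    probability p(source, mid) * p(mid, target). Paths are combined with a
    noisy-OR, so the indirect relation fails only if every path fails:
    score = 1 - prod(1 - p_path).
    """
    successors = defaultdict(dict)
    for (a, b), p in edge_probs.items():
        successors[a][b] = p

    all_paths_fail = 1.0
    evidence = []  # (intermediate node, path probability), kept for inspection
    for mid, p_first in successors[source].items():
        p_second = successors[mid].get(target)
        if p_second is None:
            continue
        p_path = p_first * p_second
        evidence.append((mid, p_path))
        all_paths_fail *= 1.0 - p_path
    return 1.0 - all_paths_fail, evidence


if __name__ == "__main__":
    score, paths = indirect_probability(EDGE_PROBS, "DrugX", "DiseaseY")
    print(f"score = {score:.3f}")  # 1 - (1 - 0.72) * (1 - 0.30) = 0.804
    for mid, p in paths:
        print(f"  via {mid}: {p:.2f}")
```

Returning the supporting paths alongside the score reflects the interpretability point made above: an expert can see which intermediate genes drive a given prediction and judge whether the reasoning holds.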

2. How did the reviewers evaluate (praise) it?

The reviewers were generally very positive about the work, highlighting several aspects that set it apart:

1. Scale and Integration

They praised the sheer scale of the knowledge extraction—processing over 34 million PubMed abstracts and integrating data from 40 public databases and high-throughput genomics analyses—which provides a much richer and more comprehensive resource than previous efforts. The integration of diverse data sources was seen as a major strength, establishing a solid foundation for advanced biomedical insights.

2. Methodological Advances and Interpretability

Both reviewers commended the use of an advanced information extraction pipeline (the LitCoin Challenge-winning system) that achieves human-level performance. The development of the probabilistic semantic reasoning (PSR) framework was especially highlighted for its interpretability. Unlike many “black-box” models, PSR allows experts to trace the reasoning behind inferred indirect relationships, which is critical for applications such as drug repurposing.

3. Impact on Drug Repurposing and Therapeutic Discovery

The system’s performance in drug repurposing studies—demonstrating high recall rates in case studies for COVID-19 and cystic fibrosis—was seen as compelling evidence of its practical utility in accelerating therapeutic discovery. The retrospective validation showing that many viable drug candidates could have been identified early (for example, during the COVID-19 pandemic) impressed the reviewers, suggesting real-world impact.

4. Overall Contribution to Biomedical Research

Reviewer #1 noted that while no single element might be entirely novel, the integrated system as a whole represents a significant advancement in automated biomedical knowledge extraction and inference. Reviewer #2 emphasized the value of the knowledge graph (KG) for the biomedical community, especially given that many existing KGs are outdated, and appreciated the efforts to provide both a server interface and downloadable data.

In summary, the reviewers evaluated the work as a credible and meaningful step forward in biomedical informatics—combining scale, methodological rigor, and interpretability to deliver a resource with clear applications in accelerating research and drug discovery.

3. If this achievement has potential applications, what are some specific applications it might have in a few years?

The achievement paves the way for several transformative applications in biomedical research and healthcare. For instance:

1. Drug Repurposing and Therapeutic Discovery

The system’s ability to infer indirect causal relations can be further refined to rapidly identify novel drug candidates or repurpose existing ones, particularly for emerging diseases. Future iterations could integrate real-time data feeds to continuously update predictions, enabling faster response during health crises.

2. Precision Medicine and Patient Stratification

With a comprehensive view of gene-disease-drug associations, clinicians could better tailor treatments to individual patients by identifying molecular targets specific to a patient’s condition. The knowledge graph could be integrated with patient genomic data to predict treatment responses and potential side effects.

3. Automated Hypothesis Generation for Research

Researchers can use the system as an automated assistant to generate and prioritize new scientific hypotheses, highlighting underexplored biological pathways or novel interactions. This could accelerate basic research and drive the discovery of previously unknown mechanisms of disease.

4. Enhanced Clinical Decision Support

The KG can serve as a backbone for decision support systems in clinical settings, providing clinicians with evidence-backed insights drawn from vast amounts of literature. For example, it could aid in diagnosing complex conditions by linking clinical symptoms with underlying molecular mechanisms.

5. Integration with Emerging AI Models

As large language models continue to evolve, combining them with a robust, data-driven KG like iKraph could enhance the accuracy and interpretability of biomedical AI tools, leading to smarter clinical and research applications.

These applications underscore the long-term potential of this achievement to not only transform biomedical research but also to directly impact patient care and healthcare innovation.

4. Can you recount the specific steps or stages from setting the research topic to the successful completion of the research?

1. Historical Foundation and Early Vision

We began with a long-held vision of using knowledge graphs for automated knowledge discovery. Our journey started in 2011 with our PLOS One paper, “Integrated Bio-Entity Network: A System for Biological Knowledge Discovery,” which laid the conceptual groundwork for today’s approach, even though back then we did not use the term “knowledge graph.”

At that time, our extraction methods were more rudimentary, and the quality of the information extracted was not comparable to what is achievable now.

2. Advancements in Technology and Methodology

With the emergence of deep learning and large language models (LLMs), we revamped our approach by developing an award-winning, human-level information extraction pipeline. This new system could accurately extract multiple critical biological entities and their relationships from vast datasets, enabling us to construct a comprehensive, high-quality knowledge graph.

Recognizing that understanding causal directions is key for generating actionable hypotheses, we took an extra step to manually annotate causal information and build a causal knowledge graph.

3. Algorithm Development and Validation

A pivotal stage was the creation of our probabilistic semantic reasoning (PSR) algorithm. This probabilistic framework allowed us to infer indirect causal relationships at scale, generating interpretable hypotheses from our graph.

We also implemented a time-sensitive validation strategy. By retrospectively testing our generated hypotheses against evolving data, we rigorously demonstrated the effectiveness and robustness of our overall approach.

Each of these stages—from the initial conceptualization to technological enhancement, algorithm development, and rigorous validation—played an essential role in the successful completion of the research.
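The time-sensitive validation in stage 3 can be illustrated with a generic time-sliced evaluation: rank candidates using only data available up to a cutoff date, then measure how many associations first reported after that date appear near the top of the ranking. The records, the cutoff, the recall@k metric, and the function `time_sliced_recall` are assumptions for illustration, not the study's exact protocol.

```python
from datetime import date

# Hypothetical ground-truth records: (drug, disease, date first reported).
# Values are illustrative only, not data from the study.
ASSOCIATIONS = [
    ("DrugA", "Disease1", date(2018, 5, 1)),
    ("DrugB", "Disease1", date(2021, 3, 15)),
    ("DrugC", "Disease2", date(2021, 9, 30)),
    ("DrugD", "Disease2", date(2022, 1, 10)),
]


def time_sliced_recall(ranked_predictions, associations, cutoff, top_k):
    """Recall@k of pre-cutoff predictions against associations reported after `cutoff`.

    `ranked_predictions` is a best-first list of (drug, disease) pairs that is
    assumed to have been generated only from data available on or before `cutoff`.
    Pairs already known by the cutoff are dropped from the ranking, so the
    metric only rewards genuinely new hypotheses.
    """
    known_before = {(d, s) for d, s, t in associations if t <= cutoff}
    reported_after = {(d, s) for d, s, t in associations if t > cutoff}
    if not reported_after:
        raise ValueError("no associations reported after the cutoff")
    novel = [p for p in ranked_predictions if p not in known_before][:top_k]
    hits = sum(1 for p in novel if p in reported_after)
    return hits / len(reported_after)


if __name__ == "__main__":
    # Hypothetical ranking produced with a 2020-12-31 cutoff.
    ranking = [
        ("DrugA", "Disease1"),  # already known before the cutoff, so skipped
        ("DrugB", "Disease1"),
        ("DrugX", "Disease2"),
        ("DrugD", "Disease2"),
    ]
    cutoff = date(2020, 12, 31)
    for k in (2, 3):
        print(k, round(time_sliced_recall(ranking, ASSOCIATIONS, cutoff, k), 3))
```

The key design choice is the strict separation by date: anything the model could have "seen" before the cutoff is excluded from scoring, which is what makes a retrospective test a fair proxy for prospective discovery.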

5. Were there any memorable events during the research? You can tell a story about anything related to people, events, or objects.

Our journey was full of ups and downs that ultimately enriched our research story:

We initially submitted the manuscript to top-tier journals such as Nature and Science, only to have it rejected without peer review; Nature Biotechnology also declined it. That period was very disheartening, and for a while, we felt too frustrated to continue submitting to similar outlets.

However, rather than giving up, we decided to persevere and submitted the work to Nature Machine Intelligence. This time, our efforts paid off, and the manuscript was accepted—a turning point that reaffirmed our belief in the value of our approach.

Along the way, one of the most memorable surprises was discovering that our method could suggest repurposing opportunities for a remarkably large number of drugs and diseases, each backed by substantial literature evidence. Reading through this supporting evidence made us realize the immense potential of our work.

At the same time, the process also revealed a critical insight: while our predictions were exciting, many repurposed drug candidates were not ideal due to simple, overlooked factors. This observation has already set the stage for our next phase of research, where reducing the false positive rate will be a key focus.

Earlier milestones, such as winning the LitCoin NLP Challenge and BioCreative challenges, also played pivotal roles in building our confidence and shaping the direction of our study. These successes, along with many precious memories from our collaborative efforts, continue to inspire us—even if I won’t elaborate on all those details here.

This rollercoaster of rejections, surprising discoveries, and key milestones not only defined our research journey but also reinforced the resilience and creativity required to drive innovation in biomedical knowledge discovery.

6. Is there a follow-up plan based on this research? If so, please elaborate.

We are applying the framework to a large-scale drug repurposing effort covering all rare diseases. We have selected a set of rare cancers, experimentally tested repurposed compounds on several rare cancer cell lines, and obtained very promising results.

Another line of research is to use the knowledge graph to develop advanced link prediction methods to predict new drug targets, new treatments for diseases, and hypothesis generation in general.
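Link prediction asks which missing edges in a graph are most likely to exist. As a hedged illustration of the kind of simple baseline such methods are usually compared against (not the team's planned method), the sketch below ranks unconnected drug-disease pairs with the classic Adamic-Adar index, which rewards shared neighbours that have few other connections. The toy graph and all function names are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical undirected toy graph linking drugs, genes, and diseases.
TOY_EDGES = [
    ("DrugX", "GeneA"), ("DrugX", "GeneB"),
    ("DiseaseY", "GeneA"), ("DiseaseY", "GeneB"),
    ("DiseaseZ", "GeneB"), ("DiseaseZ", "GeneC"),
    ("DrugW", "GeneC"),
]


def build_adjacency(edges):
    """Map each node to the set of its neighbours (edges treated as undirected)."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def adamic_adar(adj, u, v):
    """Adamic-Adar index: sum of 1 / log(degree(w)) over shared neighbours w.

    Rare shared neighbours count more than hubs. A neighbour shared by two
    distinct nodes has degree >= 2, so log(degree) is never zero.
    """
    return sum(1.0 / math.log(len(adj[w])) for w in adj[u] & adj[v])


def rank_missing_links(adj, sources, targets):
    """Score every unconnected (source, target) pair, highest score first."""
    scored = [
        (adamic_adar(adj, s, t), s, t)
        for s in sources
        for t in targets
        if t not in adj[s]
    ]
    return sorted(scored, reverse=True)


if __name__ == "__main__":
    adj = build_adjacency(TOY_EDGES)
    for score, drug, disease in rank_missing_links(adj, ["DrugX", "DrugW"], ["DiseaseY", "DiseaseZ"]):
        print(f"{drug} - {disease}: {score:.3f}")
```

Heuristics like this ignore edge types, directions, and evidence strength, which is precisely the information a literature-derived KG adds and that more advanced link prediction models can exploit.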

We are building a question-answering system by combining an LLM with our KG, and we are also integrating knowledge from analyzing large volumes of public genomics data.

7. Without a doubt, AI is one of the hottest topics of 2025, and its development depends on extensive data. What can biostatistics offer to the development of AI?

Biostatistics plays a pivotal role in powering AI developments, especially in data-intensive fields like biomedicine. Here are several ways it can contribute:

1. Robust Data Analysis and Quality Control. Biostatistics provides the rigorous methodologies needed to clean, integrate, and interpret complex datasets. This is crucial for ensuring that AI models are built on reliable and high-quality data.

2. Experimental Design and Sample Size Estimation. In designing studies or experiments for AI research, biostatistical methods help determine optimal sample sizes and experimental protocols. This ensures that the collected data is both representative and statistically robust, reducing bias and enhancing model generalizability.

3. Uncertainty Quantification and Model Validation. Biostatistics offers techniques for quantifying uncertainty, which are essential for assessing the reliability of AI predictions. By incorporating confidence intervals and hypothesis testing, researchers can better validate their models and results.

4. Causal Inference and Interpretation. Many AI applications, especially in healthcare, require not just correlation but a deep understanding of causality. Biostatistical tools enable causal inference, allowing researchers to identify and validate the underlying relationships between variables—a key aspect for interpreting AI outcomes.

5. Development of Predictive Models. Statistical methods such as logistic regression, survival analysis, and Bayesian inference provide foundational tools that have been extended into modern AI. These methods help in the development and refinement of predictive models, serving as a bridge between traditional statistics and cutting-edge machine learning techniques.

6. Evaluation and Optimization of AI Systems. Biostatistics contributes to the evaluation of AI systems through the development of performance metrics and validation frameworks. This ensures that AI models are not only accurate but also robust and reproducible across different datasets and conditions.

In summary, biostatistics brings rigorous quantitative methods and a deep understanding of data variability and uncertainty, which are indispensable for the development, validation, and interpretation of AI systems.
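To make the points on sample size and uncertainty quantification concrete, the sketch below computes a 95% Wilson score interval around a recall estimate (for example, the share of held-out drug-disease associations a system recovers) and the textbook sample size n = z²p(1 − p)/E² needed to estimate a proportion within a margin E. The counts are hypothetical; the formulas are standard results, and the function names are illustrative.

```python
import math

# Two-sided 95% standard normal quantile (z for 0.975).
Z_95 = 1.959963984540054


def wilson_interval(successes, n, z=Z_95):
    """Wilson score confidence interval for a binomial proportion (e.g. recall)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


def sample_size_for_proportion(margin, p=0.5, z=Z_95):
    """Smallest n whose normal-approximation interval has half-width <= margin.

    n = z^2 * p * (1 - p) / margin^2, rounded up; p = 0.5 is the
    conservative choice when the true proportion is unknown.
    """
    return math.ceil(z**2 * p * (1 - p) / margin**2)


if __name__ == "__main__":
    # Hypothetical evaluation: 40 of 50 held-out associations recovered.
    low, high = wilson_interval(40, 50)
    print(f"recall 0.80, 95% CI ({low:.3f}, {high:.3f})")
    print("n for +/-0.05 margin:", sample_size_for_proportion(0.05))
```

With these hypothetical numbers, a recall of 0.80 from only 50 test cases carries an interval of roughly 0.67 to 0.89, while pinning a proportion down to within ±0.05 needs about 385 cases. The Wilson interval is used here instead of the simpler normal (Wald) interval because it behaves better for small samples and proportions near 0 or 1.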

Proofread by: Hongtu Zhu