Why Are We Here?

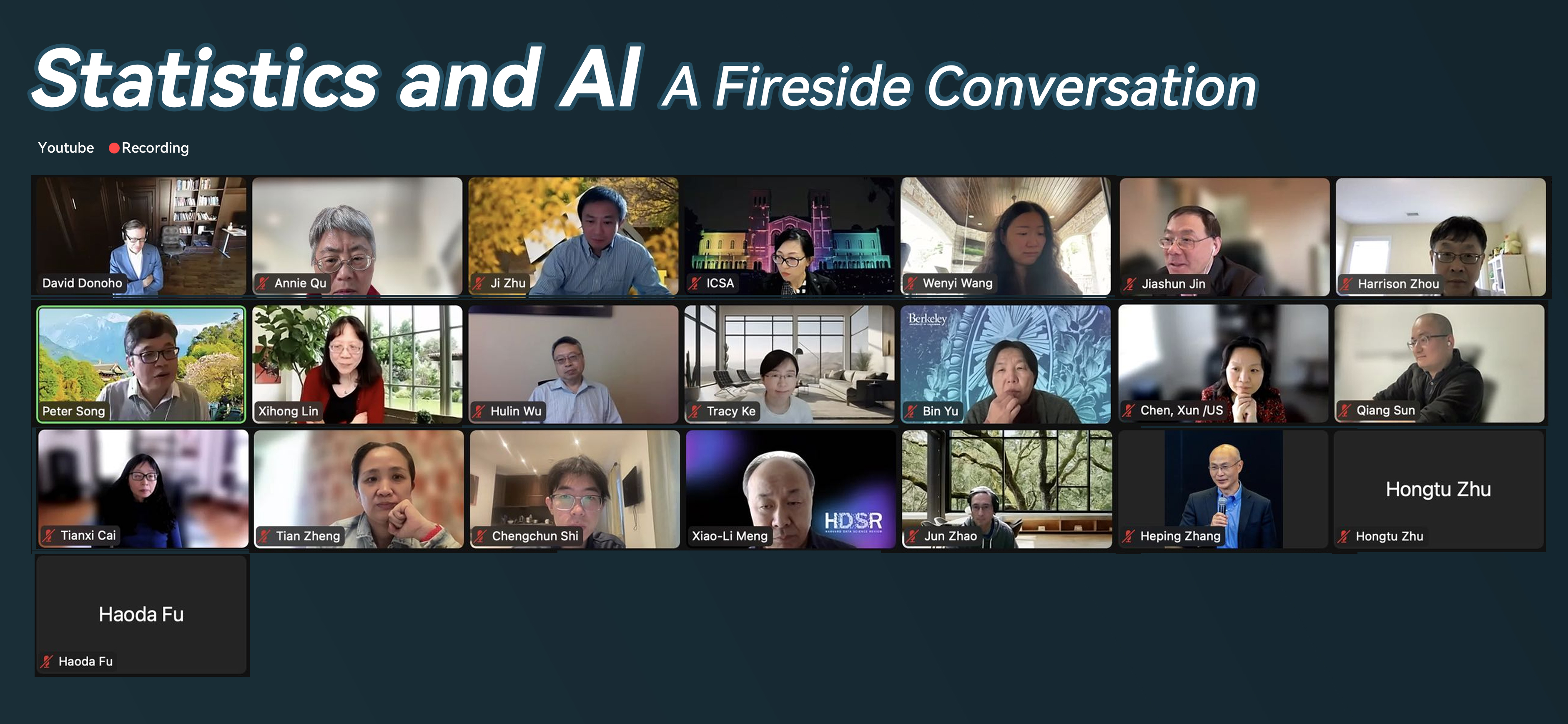

Recent rapid developments in AI have generated excitement across the entire research community, and statistics is no exception. Many statisticians feel both excited and apprehensive about what this new era of AI implies for the future of statistics and about how statistics can contribute to trustworthy AI. Informal discussions have taken place everywhere, online and offline. To channel this energy and identify actionable initiatives for our discipline, Stats Up AI and ICSA organized a fireside chat on March 17 on "empowering statistics in the era of AI" with three panels of thought leaders, hoping to drive more community-level efforts to empower statistics and statisticians and to make an impact in these exciting times of AI.

Tian Zheng opened the proceedings by thanking the association and the panelists, underscoring the importance of their insights and the significance of the topic. Xihong Lin then described the purpose of the fireside chat, emphasizing the critical role of statistics in understanding and influencing both scientific research and societal decision-making in the age of artificial intelligence. Lin outlined the event’s structure: three focused sessions on the challenges and opportunities in statistical theory, methods, and application research in the AI era.

Fireside Agendas

Introduction: Tian Zheng (Columbia University); Opening: Xihong Lin (Harvard University)

The challenges and opportunities for the statistics community in the era of AI

The first session of the conversation was on the challenges and opportunities for statistical theory and methods in the era of AI. Hongtu Zhu from UNC-Chapel Hill highlighted the pressing challenges and growing opportunities at the intersection of statistics and AI, emphasizing the transformative impact of academic innovations like ImageNet and convolutional neural networks. Jiashun Jin from Carnegie Mellon University shared observations and suggestions regarding AI and theoretical statistics based on his academic experience and collaboration with Google researchers. Tianxi Cai from Harvard University discussed the vast opportunities and pressing action items in the field of statistics, particularly in the context of AI's growing influence and the increasing amount of complex data. By reviewing the development of certain fundamental deep learning algorithms, Haoda Fu from Eli Lilly emphasized the need for Architecture-Algorithm Co-Design thinking, identifying four areas for development: low-level programming, data structures and algorithms, optimization, and design patterns. Tracy Ke from Harvard explored the intersection and distinct roles of statistics and AI, sharing an experiment on topic modeling using Large Language Models. Ke highlighted the clear role of statistical theory in inspiring new methods and identifying the limitations of methods in AI. Harrison Zhou from Yale explored the relationship between statistical theory, AI foundations, and their applications in data science. He also suggested incorporating AI researchers into the editorial boards of journals and encouraging junior faculty to publish in top AI conferences, emphasizing the need for statisticians to be part of the AI evaluation process. David Donoho provided a reflective view on the evolving landscape of statistical decision theory, AI, and their applications in data science. He discussed the perceived threat of AI overshadowing statistics, suggesting that statisticians should adopt a more welcoming approach towards AI, perhaps even "wearing AI T-shirts," to foster participation and collaboration.

See the video

Publication process of statistics in the era of AI

The second session of the conversation focused on how to improve the publication process in the era of data science and AI. Annie Qu, the current editor of the Journal of the American Statistical Association (JASA), highlighted the problem of slow journal publication, which is particularly problematic in the rapidly advancing field of AI. She advocated for early rejection of papers with little potential and proposed rewarding diligent associate editors and referees to encourage better and faster reviews. Announcing a special issue of JASA on science and AI, Qu expressed hope for fostering the integration of statistics and AI to advance scientific discovery. Ji Zhu, the editor of the Annals of Applied Statistics (AoAS), emphasized the importance of innovation not just from the authors' standpoint but also from the perspective of journal editors, associate editors, and reviewers. He floated the idea of open reviews post-acceptance to foster discussion and increase visibility. He also advocated for dual publications to make significant impacts, suggesting that discoveries be published in domain-specific journals while the sophisticated statistical modeling is featured in statistical journals. Chengchun Shi compared the publication processes of statistical journals and machine learning conferences. He proposed several changes to improve the efficiency of the statistical publication process, including shortening review cycles, reducing the number of rounds, increasing the number of references, and broadening the scope of journals. Xiao-Li Meng from Harvard shared his thoughts on the three Ps for statistical journals. He suggested that statistical journals be more Proactive in directing statistical research within the data science ecosystem; he stressed Promoting communication with the broader data science community; and he highlighted the need for journals to focus more on the data science Process rather than just the end products. Following these discussions, David Donoho provided a reflective critique of the evolution of statistical publication and research in light of the rapid changes brought by advancements in computer science and artificial intelligence.

See the video

Advancing next-generation statistical pipelines and resources in the age of AI

The third session centered on statistical education in the age of AI. Wenyi Wang from MD Anderson compared statistics and computational biology in their ability to attract students and funding. She emphasized the crucial role of statistics in the age of AI and called for better leadership training in statistics and efforts to attract more talent to the field. Hulin Wu addressed a pressing question: should statistics training expand to encompass data science and artificial intelligence (AI)? He discussed the current transition from statistics to data science and highlighted the challenge of balancing expansion into new fields with preserving the distinct identity and principles of statistics. Qiang Sun discussed the evolution of statistical training in the era of data science and AI. He pointed out the discipline's shift towards production, emphasizing that statistical work must translate into products for the greater benefit of science. Dr. Sun proposed a bold reimagining of the curriculum to include deep learning, AI, and essential engineering skills, and advocated for a more flexible and inclusive mindset in faculty and student recruitment. Bin Yu discussed the evolving nature of statistics in relation to data science and AI, emphasizing the interdisciplinary nature of these fields. She suggested integrating machine learning into the statistics curriculum to tackle the emerging challenges in AI, focusing particularly on trustworthiness, safety, and alignment with human intent. Highlighting the changes in academic courses over the past decade, Dr. Donoho pointed out shifts from traditional subjects to more current topics like machine learning, which signal a transformative period for curricula across mathematics and computer science departments. In conclusion, Dr. Donoho and fellow panelists advocated for proactive efforts to remodel statistics education to meet the demands of a rapidly changing world while maintaining the discipline's fundamental principles.

See the video